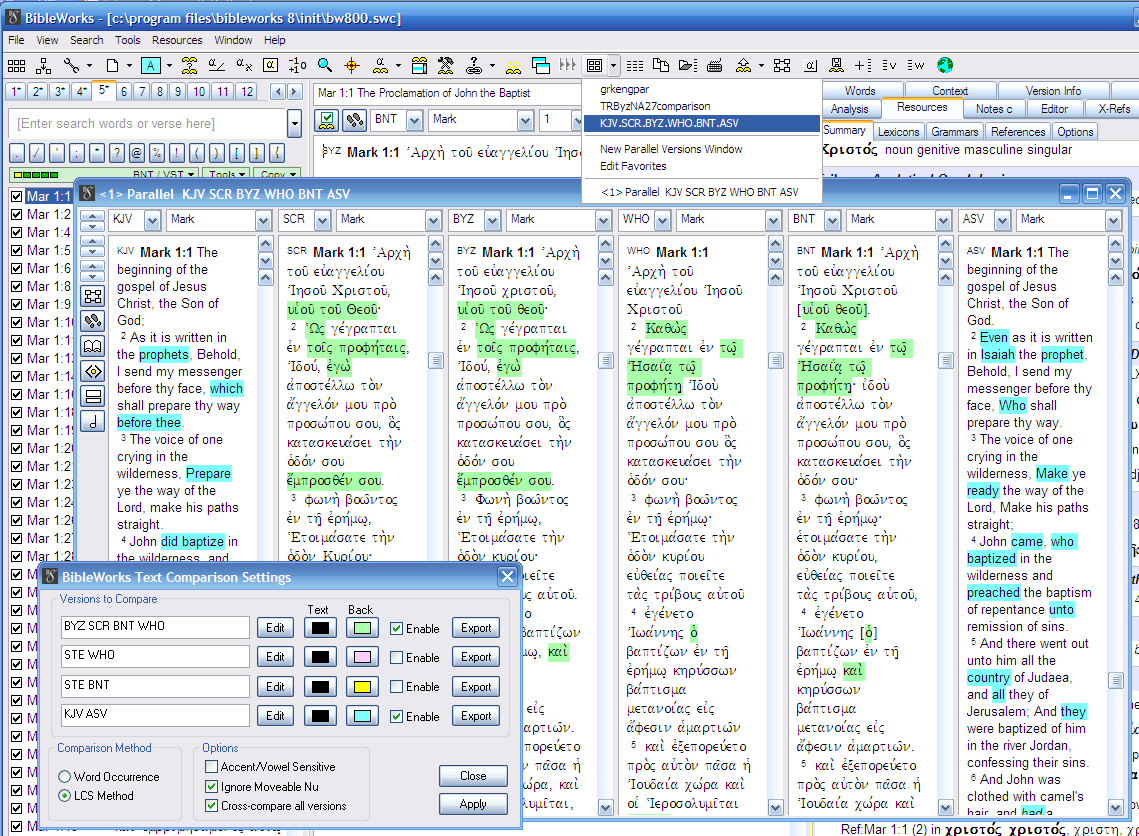

A blog post by Matthew Burgess, responding to a debate between Bart Ehrman and James White, notes that the reliability of the Greek New Testament is at least 95% even between the two most extreme text traditions (Textus Receptus vs. Westcott & Hort). That led me to write this post, in which I compare the tools that Accordance8, BibleWorks8, Logos3, and the online Manuscript Comparator provide for comparing Greek NT textual traditions. It ended up being a rather long post with lots of graphics, so to read the full review, you can READ THIS PAGE on my Scroll and Screen site. Here, though, is my conclusion, UPDATED (2009.04.07) in light of the comments:

I am most familiar with BibleWorks, so if I have missed something significant in one of the other programs or in BW8, please let me know. Each program has its strengths and special capabilities. Manuscript Comparator does the best job of displaying differences, but it lacks the NA27 and is intended as an online resource. Accordance does a good job of display, allows for a variety of comparison options, and creates useful lists of differences. BibleWorks has the most versatility and is the fastest Windows application. Logos has the most texts available for comparison, provides numerical and graphical comparisons, and exports results easily.

BOTTOM LINE:

- If you don't have any of the Bible software packages, Manuscript Comparator will achieve most of the results you need.

- If you do own one of the programs, my best advice is to familiarize yourself with the text comparison implementation in that package.

- If you are looking to buy a Bible software program, the text comparison tools will probably not be a deciding factor, but the descriptions I provide here should make you aware of what is possible with each.

BTW, Logos provides a percentage difference between two versions. I ran a comparison of Scrivener's Textus Receptus (1894) against Westcott & Hort for the whole NT, and I came up with a 6.6% variance. (It took well over an hour to get that result.) So, a 93.4% reliable Greek NT? (And remember that this includes some spelling variations, insignificant transpositions, etc.)
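For readers curious how a single "percentage difference" figure like this can be produced, here is a minimal Python sketch. It is not how Logos computes its number (its method isn't described here); it assumes both texts are plain, whitespace-separated word lists and uses the standard library's `difflib.SequenceMatcher` to count words that line up. The function name `percent_difference` is hypothetical.

```python
import difflib


def percent_difference(text_a: str, text_b: str) -> float:
    """Percentage of words that do not line up between two texts.

    Hypothetical metric, not Logos's algorithm: 100 * (1 - ratio), where
    SequenceMatcher.ratio() = 2 * matched_words / (words_a + words_b).
    """
    words_a = text_a.split()
    words_b = text_b.split()
    # autojunk=False: by default, in long sequences (200+ items) difflib treats
    # very frequent items (e.g. a common word like "και") as "popular" and
    # ignores them when matching, which would skew a whole-book comparison.
    matcher = difflib.SequenceMatcher(a=words_a, b=words_b, autojunk=False)
    return 100.0 * (1.0 - matcher.ratio())


if __name__ == "__main__":
    # Toy example: one of four words differs in each text.
    print(percent_difference("ἐν ἀρχῇ ἦν ὁ", "ἐν ἀρχῇ ἦν τὸ"))  # prints 25.0
```

On this kind of metric, a 6.6% difference corresponds to 100 − 6.6 = 93.4% agreement. Word-level matching can be slow on very long inputs, so comparing book by book is probably a more practical assumption for the whole NT.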

Thanks Mark. For those who want to do more advanced manuscript comparisons between MSS there are other tools, e.g., Collate. You can read about it here:

http://www.sd-editions.com/EDITION/

Note that Collate and Anastasia have been used for the NT transcript prototype (and the forthcoming digital NA28). I used Collate myself when I compared some 560 MSS in Jude.

Since you run Accordance in an emulator, it's not fair to compare its times with those of Windows programs run natively. For reference, running natively on a Mac laptop with settings as close to yours as I can reproduce (list text diffs), the Accordance comparison of the entire Gospel of Mark is so fast it's hard to time: maybe 1 sec.? For the entire NT it's about 4 sec.

Mark,

Good post, thank you.

The "stat" that I've heard bandied about concerns "viable" variants, i.e., anything that would actually affect the meaning of the text in any significant way (not movable nu's, spelling errors, etc.).

bob

Mark, a couple of points about Accordance.

1. Accordance will compare the first two texts of a given language, so if you were to add two panes containing English Bibles, those two would be compared as well. Heck, if you added panes containing the Delitzsch and modern Hebrew translations of the NT, those would also be compared with each other.

2. The reason we only compare two texts at a time is so that we can highlight both insertions and omissions. Besides, even if we were to highlight differences among three or more texts, you the user will inevitably end up analyzing those differences by focusing on text A and text B, then A and C, then B and C, etc.

3. You can easily change the texts which are compared by dragging a different pane into the second position. Just drag the gray area above the third Greek pane in your window to the left of the second pane, and Accordance will instantly compare that text with the first.

4. Finally, you mentioned that you can tweak the comparisons to consider or ignore case, accents, etc., but you did not mention that you can choose to compare the words as they appear in the text, their associated lexical forms (lemmas), or even their associated tags. Choosing to compare for different lemmas, for example, will enable you to quickly identify some of the more significant variants.

Thanks for doing this. As always, you do a very fair and thorough job. Now we just need to get you using a Mac! ;-)
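The pairwise approach described in points 2 and 4 can be illustrated with a word-level diff. The Python sketch below is a hypothetical illustration of the general technique, not Accordance's implementation: it normalizes each word according to "ignore case" and "ignore accents" options, diffs exactly two texts, and labels each difference as an omission (`delete`), an addition (`insert`), or a different reading (`replace`). The names `normalize` and `compare_pair` are assumptions; a lemma comparison would swap `normalize` for a lookup into a lemmatized text, which is not shown here.

```python
import difflib
import unicodedata


def normalize(word: str, ignore_case: bool = True, ignore_accents: bool = True) -> str:
    """Reduce a word to a comparison key (a sketch of 'ignore case/accents' options)."""
    if ignore_accents:
        # NFD splits letters from accents, breathings and iota subscript; drop the marks.
        decomposed = unicodedata.normalize("NFD", word)
        word = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    if ignore_case:
        word = word.casefold()  # note: also maps final sigma ς to σ
    return word


def compare_pair(text_a: str, text_b: str, ignore_case: bool = True,
                 ignore_accents: bool = True) -> list[tuple[str, str, str]]:
    """Return (kind, reading_in_a, reading_in_b) for each difference.

    kind is difflib's opcode: 'delete' (omitted from B), 'insert' (added in B),
    or 'replace' (different wording).
    """
    words_a, words_b = text_a.split(), text_b.split()
    keys_a = [normalize(w, ignore_case, ignore_accents) for w in words_a]
    keys_b = [normalize(w, ignore_case, ignore_accents) for w in words_b]
    matcher = difflib.SequenceMatcher(a=keys_a, b=keys_b, autojunk=False)
    differences = []
    for kind, i1, i2, j1, j2 in matcher.get_opcodes():
        if kind != "equal":
            # Report the original surface forms, not the normalized keys.
            differences.append((kind, " ".join(words_a[i1:i2]), " ".join(words_b[j1:j2])))
    return differences


if __name__ == "__main__":
    # Accent difference is ignored; the missing article is reported as an omission.
    print(compare_pair("ἐν ἀρχῇ ἦν ὁ λόγος", "ἐν ἀρχη ἦν λόγος"))  # prints [('delete', 'ὁ', '')]
```

With three texts, you would call it for A vs. B, A vs. C, and B vs. C, which matches the workflow David describes in point 2.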

Hey Mark,

Thanks for your post and favorable review of the Manuscript Comparator, even though it is only a prototype. I have a question regarding the con you identified, the inability to save results: what feature is desired here? It currently allows the user to navigate to an individual passage and select the desired options, and the query can then be bookmarked for future reference. Isn't this saving the results? I'd really like to hear your thoughts on how this can be improved.

Mark, I have linked to this post and provided additional comments on my blog:

http://evangelicaltextualcriticism.blogspot.com/2009/04/comparing-gnt-texts-and-manuscripts.html

Tommy: Thanks for the link. The comments on your blog post also helped me update my comments. (And yikes! I corrected all the Comfort and BARRETT.)

Rod: Thanks for indicating speeds for Accordance when run on a Mac. I have now clarified that I am running Accordance under emulation.

GSYF: I didn't bother to analyze the 6.6% variance figure calculated by Logos, but I suspect that quite a few are insignificant spelling errors or transpositions, or de/kai changes.
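One way to test a hunch like this is to sort each variant unit into rough bins. The Python sketch below is a hypothetical heuristic, not a feature of Logos or any other program reviewed here: it strips accents, breathings, and case, then flags a variant as a transposition (same words, different order), a movable-nu difference (e.g. εἶπεν/εἶπε), or a δέ/καί swap. The names `strip_marks` and `classify_variant` are assumptions, and genuine spelling (orthographic) variants would need richer rules, so they simply fall into "other".

```python
import unicodedata


def strip_marks(word: str) -> str:
    """Remove accents, breathings and case so only the letters are compared."""
    decomposed = unicodedata.normalize("NFD", word)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch)).casefold()


def classify_variant(reading_a: str, reading_b: str) -> str:
    """Hypothetical heuristic for sorting one variant unit into a rough category."""
    a = [strip_marks(w) for w in reading_a.split()]
    b = [strip_marks(w) for w in reading_b.split()]
    if a == b:
        return "accents/case only"
    if sorted(a) == sorted(b):
        return "transposition"
    if len(a) == len(b):
        # Word positions where the two readings disagree (non-empty, since a != b).
        pairs = [(x, y) for x, y in zip(a, b) if x != y]
        if all(x == y + "ν" or y == x + "ν" for x, y in pairs):
            return "movable nu"
        if all({x, y} == {"δε", "και"} for x, y in pairs):
            return "de/kai substitution"
    return "other"


if __name__ == "__main__":
    for first, second in [("εἶπεν", "εἶπε"), ("ὁ Ἰησοῦς εἶπεν", "εἶπεν ὁ Ἰησοῦς")]:
        print(first, "|", second, "->", classify_variant(first, second))
```

Counting how many units land in each bin would give a rough sense of how much of a figure like 6.6% is insignificant.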

David: Thanks for pointing out that Accordance will compare the first two texts of the same language regardless of order. I have now included a spiffy graphic in the Accordance review showing KJV - TR - WH - ASV all nicely compared. I also added some notes about the lemma and tag comparisons. (There are some minor issues here due to the differences in coding in different texts.) (BTW, I have thought about buying a cheap, used Mac just to try one out... but I resisted.)

Weston: I clarified my statement about "saving" results. Given the dynamic nature and online functioning of Manuscript Comparator, results can only be saved offline as a static graphic. I did add, as you noted, that results can indeed be bookmarked for easy recall.

Once I find a little time again, I plan to include more comparison tools including LaParola, the Digital Nestle Aland, Juxta, MS Word, and a couple others.