Find all the instances of κωμη in the gospel of Mark and create a table showing the parallel passages in Matthew or Luke.

There is a Bible search task I have long wished Accordance or Logos (or BibleWorks back in the day) could do, though it always seemed to be asking a bit much. I wanted to search for a word that occurs in one of the gospels and, at the same time, check the parallel passages in the other gospels to see whether the same wording was used. In the past, I have run the search to get the results and then compared them manually, either from the search results alone or by entering passages one at a time into a parallel resource like Aland's Gospel Parallels, to see whether they matched.

On a whim, I decided to ask Microsoft Copilot to run such a search for me. It worked!

Here's the prompt I used: "Find all the instances of κωμη in the gospel of Mark and create a table showing the parallel passages in Matthew or Luke."

My hope was to see whether Luke 'upgraded' Mark by turning villages/towns (κωμη) into cities (πολις), similar to how coinage in Luke is upgraded compared to Mark. You can see in the graphic that it explained its methodology and then created the table. Here is the summary it produced at the end:

When I told it to go ahead and create a slide deck, it went even further, generating 8 slides (including one on Theological Implications and another on Reflections, neither of which was bad) along with a cleaner table.

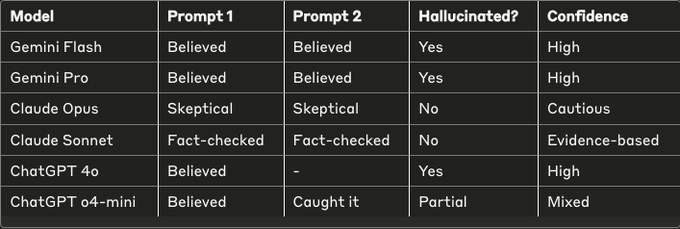

I ran some other similar searches, and some of the results were less reliable, largely because it was matching up whole passages rather than exact verses. So, as you might expect, the results need to be verified, but this is a really fascinating use of AI.

In another search, I looked for all uses of the form λεγει in Mark and asked for the parallels in Matthew and Luke to see what they do with Mark's frequent use of the historical present. The results were not precise. It did cite Toronto's Five Gospel Parallel and the Four Gospel Harmony as sources.